Abstract

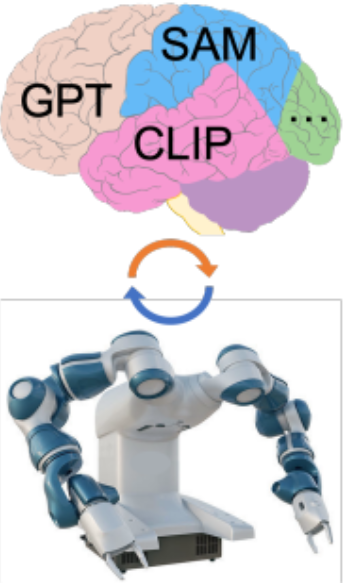

Embodied agents capable of complex physical skills can improve productivity, elevate quality of life, and reshape human-machine collaboration. We aim to train embodied agents autonomously on a variety of tasks, relying mainly on large foundation models. These models are believed to be able to act as a brain for embodied agents; however, existing methods rely heavily on humans for task proposal and scene customization, which limits learning autonomy, training efficiency, and the generalization of the learned policies. In contrast, we introduce a brain-body synchronization scheme to promote embodied learning in unknown environments without human involvement. The proposed scheme combines the wisdom of foundation models ("brain") with the physical capabilities of embodied agents ("body"). Specifically, it leverages the "brain" to propose learnable physical tasks and success metrics, enabling the "body" to automatically acquire various skills by continuously interacting with the scene. We demonstrate that the proposed synchronization can generate diverse tasks and develop multi-task policies that adapt well to new tasks and environments. We will release our data, code, and trained models to facilitate future research on building autonomously learning agents.

BBSEA
